Today, artificial intelligence represents both a security threat and a solution. But what does the tech mean for the future of the industry?

In 1971, Bob Thomas wrote a self-replicating program designed to move through ARPANET (Advanced Research Projects Agency Network), a predecessor of the modern internet. While it wasn’t malicious, the program did leave behind a message: "I'M THE CREEPER: CATCH ME IF YOU CAN."

The events that followed helped define the cybersecurity industry as we know it today. In response to Creeper, Ray Tomlinson created Reaper—the world's first antivirus program.

While it’s certainly a great piece of trivia, we're specifically interested in what Creeper and Reaper represent: the cyclical nature of cybersecurity. New technology introduces new threats. New threats expose new vulnerabilities. And new vulnerabilities prompt the development of new security solutions.

But before we explore that further, let's jump ahead more than 30 years to 2004, when a group of international hackers is believed to have sent the Cabir worm directly to antivirus developers. Cabir is credited as the first computer worm to infect mobile phones, and as the threat that gave rise to mobile security.

Speaking of mobile phones, we can’t forget the smartphone. The launch of iOS and Android was swiftly followed by the rise of rooting and jailbreaking.

All this is to say that the cybersecurity industry is, for the most part, a reactive one. Today, artificial intelligence (AI) stands as the "new" tech. And despite the widespread panic, history suggests cybersecurity will, in fact, rise to the occasion—just as it has done time and time again.

A crystal ball would be nice, but expert insight is close enough. Clear themes are emerging as new technologies shape the threat landscape. Find out more in the webinar: Cybersecurity Predictions for 2024.

From the age of automation to the age of AI

For the past decade, there’s been an evolving conversation around the "age of automation". As the Harvard Business Review notes, predictions that automation would eliminate 14% of jobs globally and radically transform another 32% didn’t account for the developments seen today with generative AI tools like ChatGPT, Gemini, and others.

Today, automation tools streamline incident detection, response, and reporting, enabling real-time security. But there’s a flip side: AI lowers the technical bar for cybercriminals. There are already extensive reports of AI-assisted cybercrime, in which generative AI tools like ChatGPT are used to develop highly targeted phishing attacks and Python scripts for malware attacks.

Despite these challenges, AI presents unique opportunities for the cybersecurity industry, particularly in proactive security. For example, it can detect vulnerabilities in existing code during the development process and analyze large-scale data, boosting automation and improving scalability—all while reducing cybersecurity costs.

How will the cybersecurity industry adapt to AI?

Phase 1: New tech

Generative AI is the hot "new" technology. In 2023, ChatGPT set what was then the record for the fastest-growing user base. In many ways, the explosion of AI can be compared to technological advancements like smartphones and smart TVs.

Just as smartphones revolutionized communication and introduced security challenges like mobile malware and app vulnerabilities, generative AI is transforming data generation and interaction. ChatGPT, Gemini, and similar platforms have sparked both excitement and apprehension in the cybersecurity community.

The rapid adoption of generative AI is not just a matter of new capabilities but also of speed and scale. Traditional technologies evolved over years or even decades, giving cybersecurity professionals time to develop countermeasures. Generative AI’s swift integration into business and daily life means that defenses must evolve as quickly as the threats do.

Phase 2: New threats

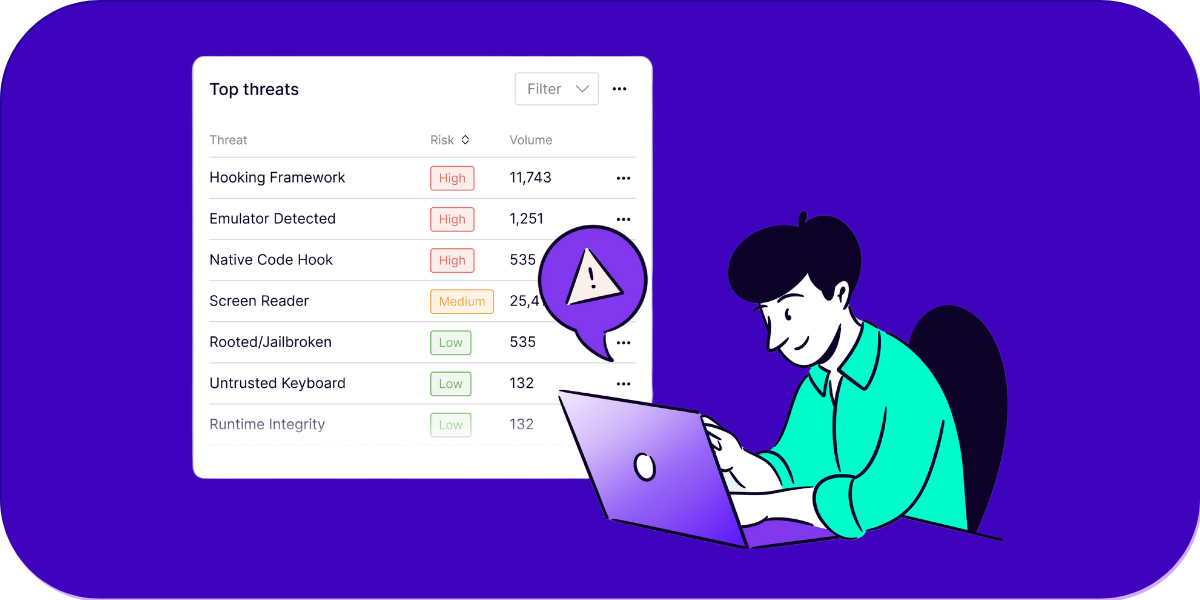

AI introduces several new cybersecurity threats, including:

- Automated reverse engineering: AI can analyze software to identify vulnerabilities and exploit them, accelerating the process for cybercriminals. For example, it can analyze a code base and provide a user with specific instructions on how to use that information for malicious code injection or repackaging.

- Deepfakes: AI-generated video and audio that is nearly indistinguishable from real footage can be used for malicious purposes like impersonation and spreading misinformation. There are already stories of companies paying ransoms because of deepfake conversations engineered to look like their employees, and broader concerns about election-related misuse are also emerging.

- Generated text for social engineering: AI tools like ChatGPT can create highly convincing phishing emails and messages, making social engineering attacks more effective.

- Bypassing KYC checks with image generation: AI can generate images that fool facial recognition systems and bypass know your customer (KYC) checks, posing significant risks to financial institutions. In fact, reports have surfaced of services that sell, for small sums of money, fake passports and IDs that can evade detection. One recent example, OnlyFake, shows how AI tools can be used to create identity documents so convincing they deceive financial institutions and government agencies.

AI-driven threats are inherently adaptive and can evolve in response to defensive measures. As a result, cybersecurity professionals must shift the way they think to anticipate potential threats and develop flexible, forward-thinking strategies. This adaptability underscores the need for a paradigm shift from reactive to proactive cybersecurity measures, and for creative ways to leverage AI to stay ahead of attackers.

Phase 3: New vulnerabilities

The new threats brought by AI expose various vulnerabilities, including:

- Human layer vulnerabilities: One area that has come to light is the urgent need for effective cybersecurity awareness training. AI-assisted attacks exploit human psychology, making it easier to deceive individuals not adequately trained to recognize such threats. Training workers and individuals to recognize these threats—or take steps to validate information before taking action—will become more vital.

- Ineffective validation: As AI-generated content becomes more realistic, traditional validation is becoming less reliable. For example, verifying the authenticity of videos or images is becoming increasingly difficult, leading to potential security breaches. Increasingly sophisticated validation solutions are needed.

- Technical vulnerabilities: AI systems themselves can be targeted. For instance, adversarial attacks involve manipulating input data to deceive AI models, while model poisoning corrupts the training data to compromise the AI’s integrity and functionality.

As a result, many organizations are reevaluating cybersecurity frameworks that may rely on static defenses that are insufficient against the dynamic nature of AI-driven threats. This evolution requires a multi-layered approach to security that integrates AI-based defenses and greater training with traditional methods to protect against both known and unknown threats.
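To make the adversarial attacks mentioned in the list above more concrete, here is a minimal sketch against a toy linear detector. The weights, bias, feature values, and function names are all hypothetical and chosen only for illustration; real models and real attacks are far more complex. For a linear model the gradient of the score with respect to the input is just the weight vector, so the sketch nudges each feature against the sign of its weight (the idea behind sign-of-gradient attacks).

```python
from typing import List

# Hypothetical linear detector: a positive score means "flag as malicious".
WEIGHTS: List[float] = [2.0, -1.0, 0.5]
BIAS: float = -0.5


def score(features: List[float]) -> float:
    """Weighted sum of the input features plus a bias term."""
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def is_flagged(features: List[float]) -> bool:
    """The toy detector flags any input whose score is above zero."""
    return score(features) > 0


def sign(value: float) -> int:
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(features: List[float], epsilon: float) -> List[float]:
    """Shift each feature by at most epsilon in the direction that lowers the score."""
    return [x - epsilon * sign(w) for w, x in zip(WEIGHTS, features)]


# Illustrative input that the toy detector flags.
original = [0.6, 0.2, 0.4]
# Each feature moves by no more than 0.25, yet the verdict changes.
adversarial = perturb(original, epsilon=0.25)

print(is_flagged(original))
print(is_flagged(adversarial))
```

The point of the sketch is that small, targeted changes to the input can flip a model's decision, which is why AI-based defenses need their own hardening and monitoring.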

Phase 4: New solutions

The good news is that security vendors are adapting their solutions to incorporate AI, enhancing their capabilities in several ways:

- Automated incident response systems: AI-driven tools can quickly detect and respond to incidents, reducing the time between detection and mitigation. For example, an AI system can automatically isolate infected devices from the network to prevent the spread of malware.

- Advanced threat detection: AI can analyze vast amounts of data to identify patterns and anomalies that indicate potential threats. Predictive analytics or behavioral analysis, for instance, can forecast and mitigate risks before they materialize into actual attacks.

- Proactive security measures: AI-powered tools can continuously monitor network traffic, user behavior, and system logs to detect suspicious activities in real time. This proactive approach helps identify and neutralize threats.
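The detection and response ideas in the list above can be sketched with a simple statistical baseline. This is only an illustration, assuming each device reports a count of outbound connections per hour; the device names, the numbers, the threshold, and the `isolate` helper are hypothetical, and a production system would call a real network-control API and use far richer models than a z-score.

```python
from statistics import mean, stdev
from typing import Dict, Iterable, List, Set


def find_anomalies(
    baseline: Dict[str, List[float]],
    latest: Dict[str, float],
    threshold: float = 3.0,
) -> List[str]:
    """Return devices whose latest reading is far above their own baseline."""
    flagged = []
    for device, history in baseline.items():
        sigma = stdev(history)
        if sigma == 0:
            continue  # no variation in the baseline, so a z-score is undefined
        z_score = (latest[device] - mean(history)) / sigma
        if z_score > threshold:
            flagged.append(device)
    return flagged


def isolate(devices: Iterable[str], quarantined: Set[str]) -> Set[str]:
    """Placeholder for a real isolation call; here it only records the decision."""
    return quarantined | set(devices)


# Hypothetical outbound connections per hour for two devices.
BASELINE = {
    "laptop-17": [40, 42, 38, 41, 39],
    "server-03": [120, 118, 122, 121, 119],
}
LATEST = {"laptop-17": 44, "server-03": 900}

suspicious = find_anomalies(BASELINE, LATEST)
quarantine = isolate(suspicious, set())
print(suspicious, quarantine)
```

Here the laptop's reading stays within normal variation, while the server's sudden spike is flagged and handed to the isolation step, mirroring the detect-then-contain flow described above.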

AI isn’t just enhancing existing solutions. It can also be used to create dynamic security policies that adjust in real time based on the threat environment, allowing organizations to be agile and responsive to new threats. And it can inform resource allocation, so that technical teams adapt in real time to a constantly shifting threat landscape.
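As a rough illustration of a policy that adjusts to the threat environment, the sketch below maps a threat score between 0 and 1 to a set of controls. The score, the thresholds, and the policy fields are all assumptions made for the example; in practice the score might come from a detection model, and the controls would be enforced by identity and network tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    require_mfa: bool         # ask for a second factor on every login
    session_minutes: int      # how long a session lasts before re-authentication
    block_new_devices: bool   # refuse logins from devices not seen before


def policy_for(threat_score: float) -> Policy:
    """Pick stricter controls as the (hypothetical) threat score rises."""
    if threat_score >= 0.8:
        return Policy(require_mfa=True, session_minutes=15, block_new_devices=True)
    if threat_score >= 0.4:
        return Policy(require_mfa=True, session_minutes=60, block_new_devices=False)
    return Policy(require_mfa=False, session_minutes=480, block_new_devices=False)


# The same function yields different controls as conditions change.
for level in (0.1, 0.5, 0.9):
    print(level, policy_for(level))
```

Keeping the policy as plain data makes each change easy to log and review, which matters when controls are tightened or relaxed automatically.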

Phase 5: Repeat

Just when it seems that you’ve arrived at the end of the process, it starts again when new tech is introduced. AI-powered threats demand that organizations find strategies for continuous adaptation and improvement in cybersecurity measures. Just as smartphones and cloud computing transformed cybersecurity, AI will drive another evolution in threat detection and mitigation strategies.

A look at how AI-assisted threats can be implemented in practice underscores how critical it is to stay vigilant. Organizations need plans and partnerships in place that help them adopt preventative measures, industry best practices, and AI-powered tools to stay ahead of emerging threats. In a world of dynamic threats, cybersecurity professionals need a plan for dynamic response. And the reputational and financial risks posed by AI-assisted attacks are too great to ignore.

What comes after AI?

Right now, all eyes are on AI, but it’s critical to keep an eye on the horizon. Technological innovation will only accelerate, in part powered by AI and supported by other advancements. Areas for leaders to be mindful of include:

Quantum computing

Quantum computers have presented a known threat to cybersecurity for years. But recent data suggests that their advancement may be faster than expected. A sufficiently powerful quantum computer could potentially break widely used cryptographic algorithms such as RSA (Rivest–Shamir–Adleman), rendering existing encryption methods obsolete almost instantly. For leaders, this highlights the need to explore whether it’s time to adopt quantum-resistant cryptographic algorithms. This shift is important because encrypted data could be captured now and decrypted later, once the technology allows.
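The "capture now, decrypt later" risk follows from the fact that RSA's security rests on the difficulty of factoring the public modulus. The toy sketch below uses a deliberately tiny key so that plain trial division can factor it; trial division is only a classical stand-in here for what a quantum algorithm such as Shor's could do to real key sizes. The key values, the message, and the function names are illustrative assumptions, and real RSA also uses padding that is omitted here.

```python
from typing import Tuple


def factor(n: int) -> Tuple[int, int]:
    """Factor n by trial division. Feasible only because n is tiny."""
    candidate = 2
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 1
    raise ValueError("n has no non-trivial factors")


def recover_private_exponent(n: int, e: int) -> int:
    """Rebuild the private exponent from the public key once n is factored."""
    p, q = factor(n)
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)  # modular inverse (Python 3.8+)


# Toy public key; real moduli are 2048 bits or more.
N, E = 3233, 17

# Step 1: an attacker records ciphertext today without being able to read it.
message = 65
captured = pow(message, E, N)

# Step 2: later, with the ability to factor N, the attacker derives the key.
d = recover_private_exponent(N, E)
print(pow(captured, d, N))  # recovers the original message
```

Nothing about the recorded ciphertext changes between the two steps, which is why data with a long confidentiality lifetime is the first candidate for quantum-resistant algorithms.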

Nation-states as threat actors

In a year with critical elections around the world and multiple conflicts, it’s important to recognize the risk that AI and future-focused tools pose. Deepfakes could be used to influence election outcomes. Malware could be used to impact critical infrastructure or essential services. As technical talent finds ways to use AI to uncover vulnerabilities at scale, they’ll face important questions about whether to share that information with ethical hacking organizations or monetize it by selling to cybercriminals.

Supply-chain attacks

Supply chains in critical industries are another fast-growing target. Supply-chain attacks exploit the trust relationships between organizations and their suppliers, introducing vulnerabilities that can be difficult to detect and mitigate. The SolarWinds Orion hack saw suspected nation-state actors infiltrate thousands of organizations, including U.S. government agencies, by exploiting Orion’s system management tools to access critical systems and data. Organizations will need to consider comprehensive supply-chain security measures, such as rigorous vetting of suppliers, continuous monitoring for vulnerabilities, and collaborative security efforts.
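One small, concrete piece of supplier vetting is checking that a delivered artifact matches a digest recorded when it was reviewed. The sketch below shows that check with SHA-256; the artifact bytes and function names are made up for the example. Note the limitation: a pinned digest catches tampering that happens after the digest was recorded, but not a compromise inside the supplier's own build process, so it is one layer among several rather than a complete defense.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, pinned_digest: str) -> bool:
    """Compare the artifact's digest with the pinned one in constant time."""
    return hmac.compare_digest(sha256_hex(data), pinned_digest.lower())


# Hypothetical artifact as it looked when it was reviewed and pinned.
reviewed = b"vendor-update-1.0"
PINNED = sha256_hex(reviewed)

# The same artifact later arrives with extra bytes appended.
tampered = reviewed + b"\x00unexpected-payload"

print(verify_artifact(reviewed, PINNED))
print(verify_artifact(tampered, PINNED))
```

In a real pipeline the pinned digest would live in version control or a lockfile, and a failed check would stop the deployment and raise an alert.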

Pre-installed hardware and software vulnerabilities

As hardware with pre-installed apps and software becomes more common, it introduces another layer of risk. These pre-installed components can serve as vectors for cyberattacks, allowing malicious actors to exploit vulnerabilities before they’re even identified. As a result, ensuring hardware and software security from manufacturing to deployment requires a comprehensive approach, involving everything from analyzing penetration testing results for specific SDK components to continuous threat monitoring.

Preparing for the future

As we prepare for the future beyond AI, strategic foresight is becoming cybersecurity’s highest-value capital. Anticipating potential threats and understanding the implications of emerging technologies enables organizations to stay ahead. The integration of AI, quantum computing, and other advanced technologies into cybersecurity strategies will continue to support the integrity and security of digital ecosystems.

The future of cybersecurity lies in the ability to anticipate, adapt, and innovate. Rapid technological innovation constantly introduces new challenges, but it also provides opportunities to enhance defenses and develop new protective solutions.

With AI, quantum computing, and more innovation on the horizon, it’s vital to keep tabs on the threat landscape. Find out more in the webinar Cybersecurity Predictions for 2024.